Video Analysis

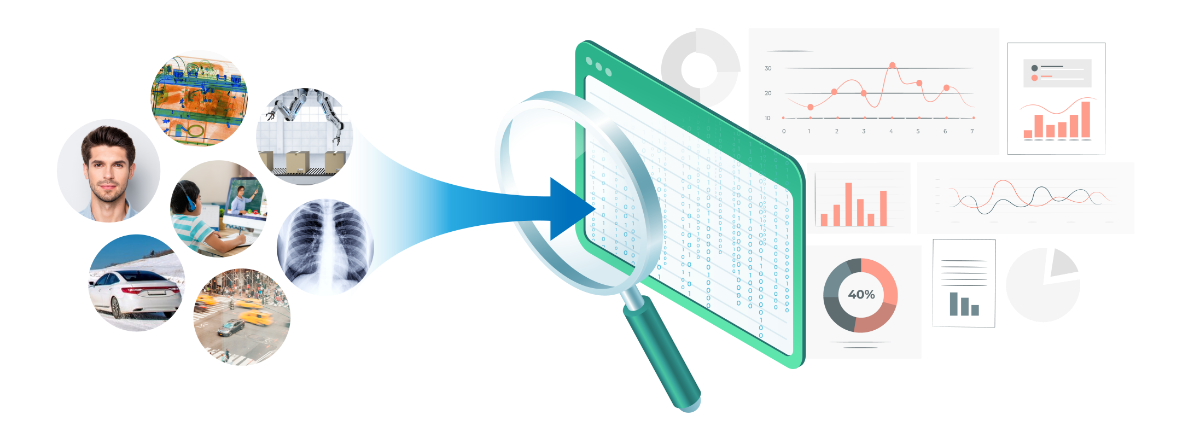

With the spread of AI technology, identification and analysis work that used to take months can now return accurate answers within seconds, provided that sufficient historical data is available. In the process of transformation and upgrading, visual recognition is the most effective way to optimize the overall strategy on a large scale. However, according to statistics, fewer than 20% of enterprises have successfully implemented AI visual recognition. One of the main reasons projects fail is poor recognition results caused by poor control over the source video.

Must-Read Guide to Intelligent Imaging Solutions

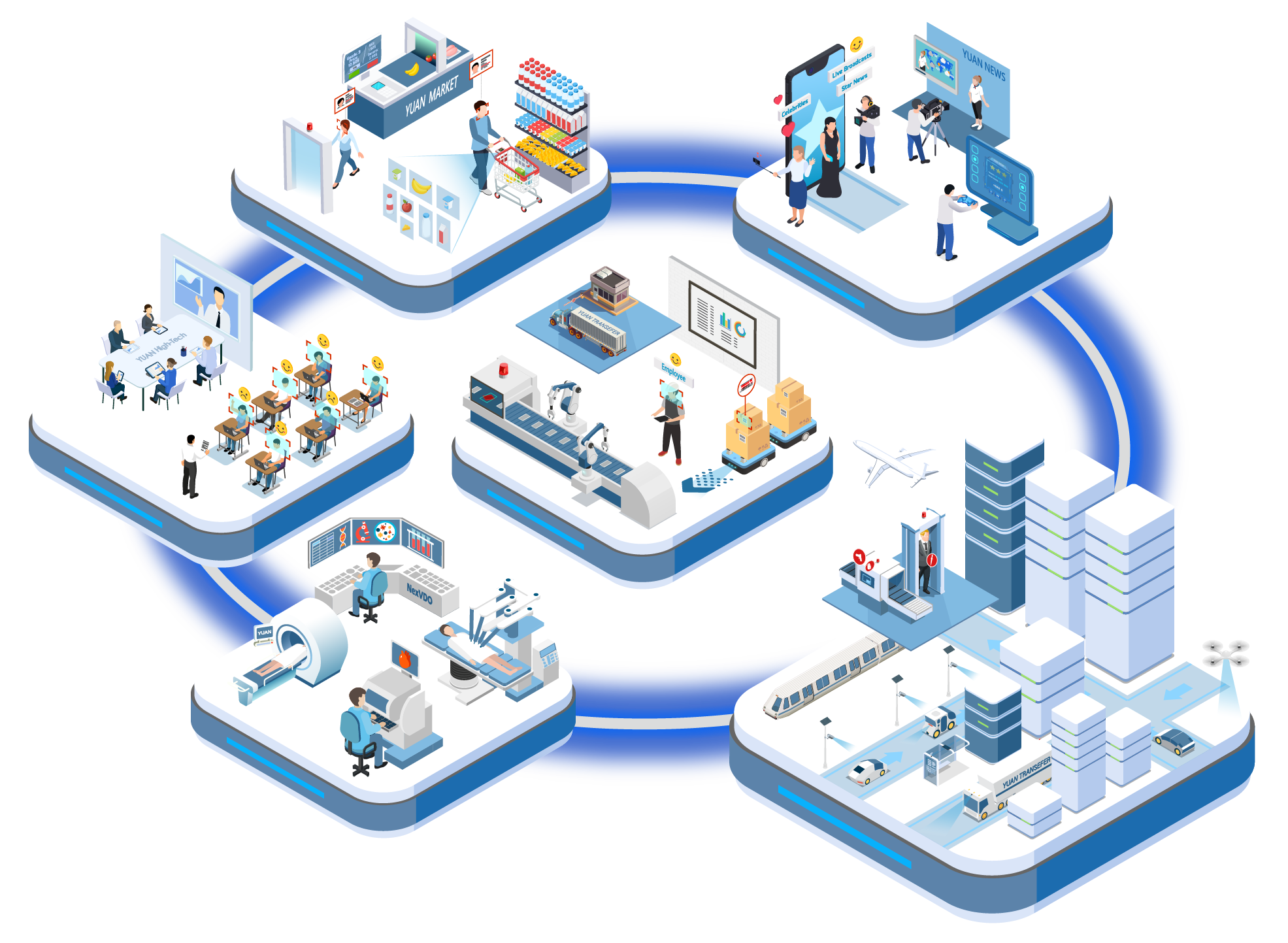

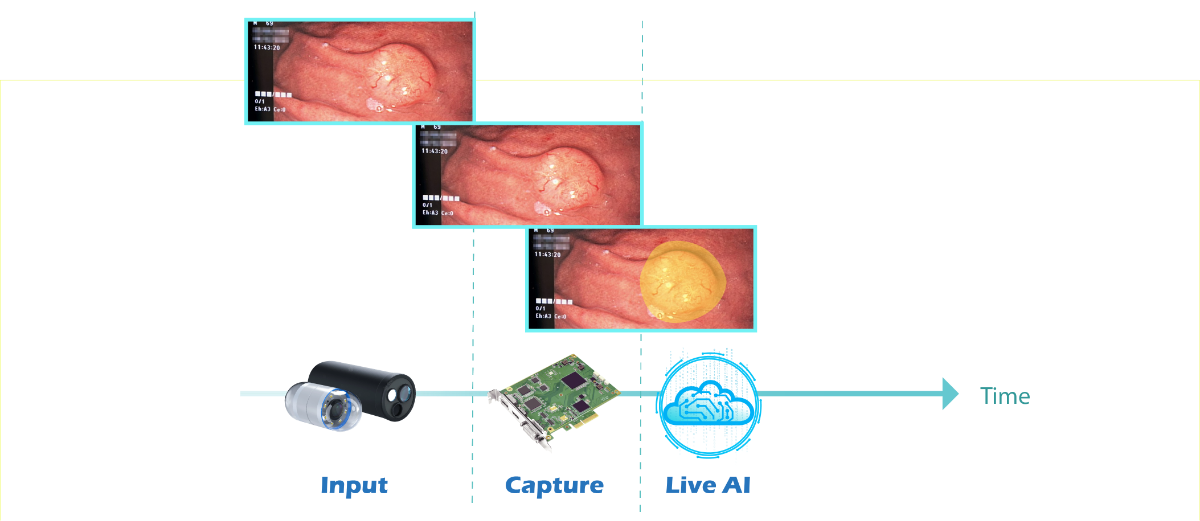

System integrators (SIs) use two system architectures to implement video AI: analyzing the video on the local site or in the cloud. The cloud approach sends the captured images directly to cloud services with recognition capability, such as those from IBM, Microsoft, or Amazon, and collects the analysis results over the network. The advantage is that a range of AI models is already deployed in the cloud, and developers can apply them directly.

Nowadays, edge intelligence computing is not only about accuracy but even more about real-time response. If you want to reduce the delay to a level that the human eye cannot distinguish, the best way is to add a capture card to your computer so that it captures images directly and performs AI analysis locally, achieving real-time AI!

YUAN has accumulated extensive industry knowledge and worked on a wide range of video problems for more than 30 years. We have learned to identify and resolve them as quickly as possible, building up a competitive edge that is hard to shake over time. Therefore, for those unable to develop AI on their own, YUAN can help customers add intelligence to their existing software and align it with their industry through the dozens of video analysis models native to the NexVDO SDK and its rich industry experience.

Sometimes a wrong analysis result is not a matter of the algorithm but of the quality of the source video. To fix this problem early, the NexVDO SDK has a video-core module that includes video capture, video recording, and streaming modules. In the pre-processing stage, a user can standardize the size of the video and convert its color space, giving full control over video quality and acquisition speed from the front end. In the post-processing stage, AI effects can be overlaid directly on the video, making the original AI analysis software more real-time and efficient than ever!
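To make the pre-processing idea concrete, here is a minimal sketch in plain Python of the two steps mentioned above: standardizing the frame size and converting the color space. It does not use the NexVDO SDK API. The function names `resize_nearest`, `rgb_to_ycbcr`, and `preprocess` are hypothetical, and the choice of nearest-neighbor scaling and full-range BT.601 (JFIF) YCbCr is an assumption made only for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]  # rows of (R, G, B) pixels


def resize_nearest(frame: Frame, width: int, height: int) -> Frame:
    """Scale a frame to a fixed size with nearest-neighbor sampling."""
    src_h, src_w = len(frame), len(frame[0])
    return [
        [frame[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]


def rgb_to_ycbcr(pixel: Pixel) -> Pixel:
    """Convert one full-range RGB pixel to YCbCr (BT.601 / JFIF coefficients)."""
    r, g, b = pixel
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    # Round and clamp each channel to the 8-bit range.
    return tuple(min(255, max(0, round(v))) for v in (y, cb, cr))


def preprocess(frame: Frame, width: int, height: int) -> Frame:
    """Hypothetical pre-processing step: fixed size first, then color space."""
    resized = resize_nearest(frame, width, height)
    return [[rgb_to_ycbcr(p) for p in row] for row in resized]
```

Feeding every frame through the same `preprocess` step means the analysis model always sees input of one size and one color space, whatever the camera delivers.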

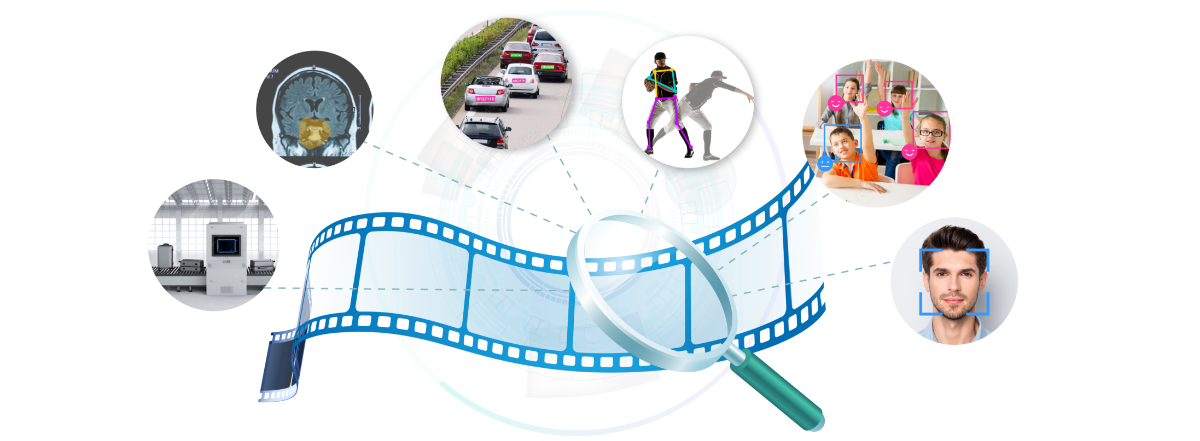

The NexVDO SDK is divided into three modules for AI analysis: video content analysis, biometrics, and behavior analysis.

Type One - Video content analysis module

Humans care about what happened, not about the video data.

Video content analysis can be divided into image segmentation and object detection technologies. For example, a user may want to detect whether a large vehicle is a coupled car or a bus. In the video AI field, neither method is inherently better than the other; rather, it is a question of which one brings faster results for the problem to be solved.

After recognizing the objects, the next step is to build applications on top of them. Currently, the NexVDO SDK has three built-in modules: (1) classification counting, (2) feature matching, and (3) text recognition.
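As a rough illustration of what classification counting means in practice, the sketch below tallies detected objects per class for one frame. It is not the NexVDO SDK API: the `(label, confidence)` detection format, the `count_by_class` name, and the 0.5 threshold are all assumptions chosen for the example.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Detection = Tuple[str, float]  # (class label, confidence score), hypothetical format


def count_by_class(detections: Iterable[Detection],
                   min_confidence: float = 0.5) -> Dict[str, int]:
    """Count detections per class, ignoring low-confidence results."""
    counts = Counter(
        label for label, score in detections if score >= min_confidence
    )
    return dict(counts)


# Example detections for a single frame (made-up values).
frame_detections = [
    ("car", 0.91), ("bus", 0.84), ("car", 0.42),
    ("person", 0.77), ("car", 0.66),
]
print(count_by_class(frame_detections))
```

The confidence threshold is the main tuning knob here: raising it trades missed objects for fewer false counts.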

Typical applications include vehicle counting in traffic flow, people counting, hot zone detection in shopping malls, and inventory counting in warehouses. In the medical industry, doctors can use ultrasonic tumor detection. In the security industry, managers can perform surveillance search, cross-camera tracking, and factory defect detection. Other applications, such as license plate recognition for smart parking and medical information digitization, belong to the field of text recognition.

System developers can also use the NexVDO SDK to provide pedestrian and vehicle flow counting functions that solve industry-specific problems. In one real use case, the customer adjusts traffic signal timing by analyzing the location of vehicles and pedestrians in video from cameras installed at intersections, so that overall resource usage is more efficient than before.

Read more: THI and YUAN Collaborate to Create AI Intelligent Light Signs

In addition, YUAN is also working with customers in the medical industry to integrate the NexVDO SDK into AI workstation software to enable ultrasound tumor identification and provide doctors with real-time assistance in analyzing disease signs.

Read more: Advantech and YUAN join hands to realize remote medical AI

Type Two - Biometric Module

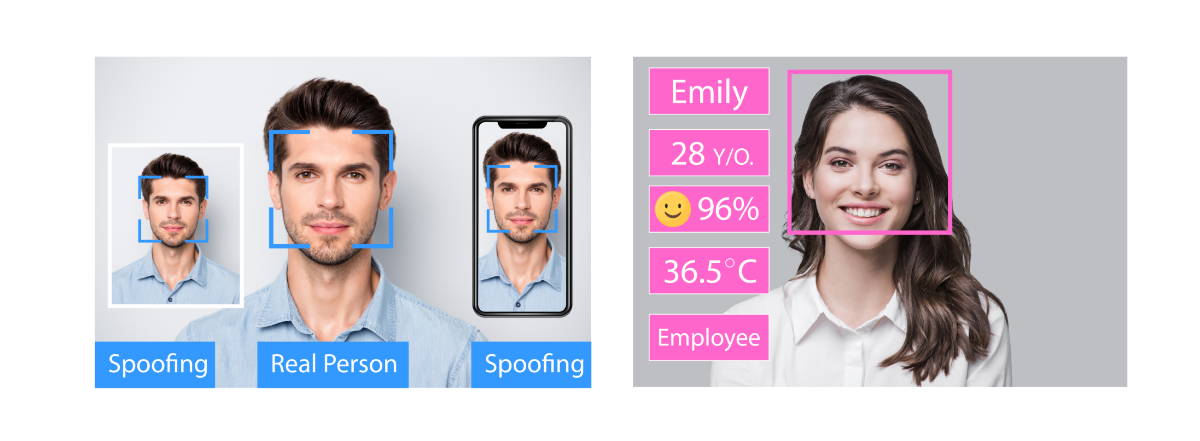

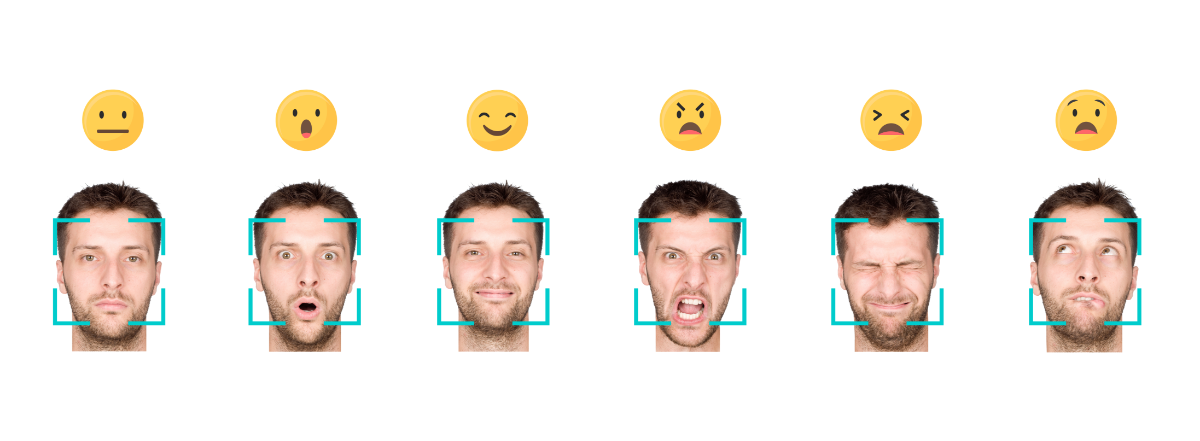

Used in situations where you want to know information about a face on the screen

First of all, it is essential to understand the application area: do you need face detection or face recognition? The NexVDO SDK supports 1:N face matching and achieves a 99.8% recognition rate. Even if a person turns their head nearly 90 degrees, the face can still be accurately recognized. In addition, the NexVDO SDK covers 68 3D feature points on the face, helping developers of face recognition applications not only recognize faces but also go further into refined expression recognition.
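For readers new to the term, 1:N matching means comparing one captured face against a gallery of N enrolled faces and returning the best match. The sketch below shows the general idea using cosine similarity between feature vectors. It is only an illustration, not the NexVDO SDK's method: the `identify` function, the toy three-dimensional vectors, and the 0.8 threshold are assumptions.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(probe: Sequence[float],
             gallery: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """1:N search: return the best-matching enrolled name, or None if too weak."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score  # nobody in the gallery is similar enough
    return best_name, best_score


# Toy gallery with made-up feature vectors; real ones have far more dimensions.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], gallery))
```

The threshold decides when the system answers "unknown person" instead of forcing a match, which matters more as N grows.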

Read more: Why does the recognition rate drop when there are more features?

In the intelligent retail industry, it is often necessary to analyze potential customers' preferences. Expression analysis on the video collected by digital signage allows the system to detect the age and gender of customers and analyze their reactions. This helps deliver advertising content accurately and raise the marketing conversion rate. In online teaching courses or distance learning, integrated expression analysis can help teachers quantify learning efficiency by analyzing students' status in class. The NexVDO SDK provides a simple API ranging from general face detection to precise expression recognition, allowing any industry to integrate it quickly with whatever software application it is developing!

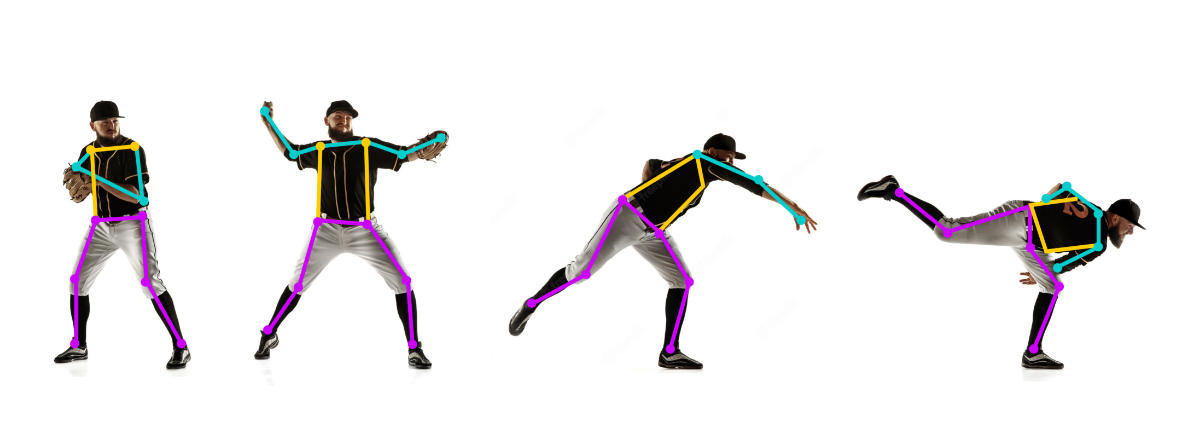

Type Three - Behavior Analysis Module

Decompose human postures or behaviors on continuous images.

The NexVDO SDK identifies 17 key detection points on the human body, from head to toe, to help software developers identify human behavior in images, such as crossing hands, standing, sitting, lying down, raising hands, crossing, etc. When the behavior is combined with stereoscopic spatial positioning across continuous images, it can be extended to identify more behaviors, such as turning, falling, wandering, and throwing, so users can analyze many different kinds of behavior.
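To show how key points can turn into behaviors, here is a minimal rule-based sketch. It assumes the 17 points follow the widely used COCO keypoint layout and that each point is an `(x, y)` image coordinate with y growing downward. Neither assumption is confirmed for the NexVDO SDK, and the function names and rules are hypothetical simplifications.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates, y grows downward

# Assumed layout: the 17 keypoints of the COCO pose format.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def is_hand_raised(kp: Dict[str, Point]) -> bool:
    """A hand counts as raised when a wrist is higher than its shoulder."""
    return any(
        kp[f"{side}_wrist"][1] < kp[f"{side}_shoulder"][1]
        for side in ("left", "right")
    )


def is_lying_down(kp: Dict[str, Point]) -> bool:
    """The torso is treated as lying when it is more horizontal than vertical."""
    shoulder_x = (kp["left_shoulder"][0] + kp["right_shoulder"][0]) / 2
    shoulder_y = (kp["left_shoulder"][1] + kp["right_shoulder"][1]) / 2
    hip_x = (kp["left_hip"][0] + kp["right_hip"][0]) / 2
    hip_y = (kp["left_hip"][1] + kp["right_hip"][1]) / 2
    return abs(hip_x - shoulder_x) > abs(hip_y - shoulder_y)
```

Behaviors such as falling or wandering need the same key points tracked over several frames, for example a standing pose that becomes a lying pose within a short time window.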

Extended reading: What is a skeleton critical point?

Sometimes we want to analyze both human posture and spatial positioning. For example, a coach may want to analyze a baseball pitcher in training using a 1080p240 high-speed video capture card. For every captured image, the NexVDO SDK provides the critical points of the skeleton for posture analysis, and the quantified data can lead the pitcher to better performance! In hospital long-term care centers, elders used to be required to wear smart bracelets. Now, zero-touch identification and analysis is possible through the NexVDO SDK.

In addition, YUAN is also working with educators to create so-called intelligent classroom applications. Classroom teaching materials are combined with camera images to synchronize images of teacher-student interactions in the classroom and to analyze students' various behaviors in class through gestures. Cameras can also track teachers in real time as they move.

Read more: YUAN and IBase join hands to create intelligent classrooms with AI

As you can see, video AI analysis has brought about tremendous changes in different industries. The NexVDO SDK is already fully integrated with NVIDIA® TensorRT™ and the Intel® Distribution of OpenVINO™ Toolkit, which help it run AI analysis more efficiently. It allows developers to dramatically shorten the development time from image acquisition to intelligent analysis, so that you can see the images and identify the results simultaneously!